AI isn't unbiased because humans are biased

“Machine Bias”, screamed the headlines. The tagline said, “There’s software used across the country to predict future criminals. And it’s biased against blacks.”

In a revealing exposé in 2016, ProPublica, a US-based Pulitzer Prize-winning non-profit news organisation, analysed the software known as COMPAS used by US courts and police to forecast which criminals are most likely to re-offend, and found it biased against Afro-Americans.

Guided by inputs from an algorithm, police and judges in America made decisions on defendants and convicts, determining everything from bail amounts to sentences. The report concluded that the COMPAS software was twice as likely to falsely label black defendants as future criminals as it was white defendants.

Further, it was more likely to falsely label white defendants as low risk. In other words, more supposedly high-risk black defendants did not commit crimes. In contrast, more supposedly low-risk white defendants did commit crimes. The allegedly unbiased algorithms were definitely unfair.
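The two kinds of error described above are usually called the false positive rate (people flagged high-risk who did not re-offend) and the false negative rate (people labelled low-risk who did). The sketch below shows how an auditor might compare them across groups. It is only an illustration: the function name, the group labels "A" and "B" and the toy records are invented for this example and are not ProPublica's data or method.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, predicted_high_risk, reoffended in records:
        if predicted_high_risk:
            key = "tp" if reoffended else "fp"
        else:
            key = "fn" if reoffended else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        did_not_reoffend = c["fp"] + c["tn"]
        did_reoffend = c["fn"] + c["tp"]
        rates[group] = {
            # Share of non-reoffenders who were wrongly flagged high-risk.
            "false_positive_rate": c["fp"] / did_not_reoffend if did_not_reoffend else None,
            # Share of reoffenders who were wrongly labelled low-risk.
            "false_negative_rate": c["fn"] / did_reoffend if did_reoffend else None,
        }
    return rates


# Invented toy data, for illustration only.
TOY_RECORDS = (
    [("A", True, False)] * 2 + [("A", False, False)] * 2
    + [("A", True, True)] * 3 + [("A", False, True)] * 1
    + [("B", True, False)] * 1 + [("B", False, False)] * 3
    + [("B", True, True)] * 2 + [("B", False, True)] * 2
)

if __name__ == "__main__":
    for group, r in sorted(error_rates_by_group(TOY_RECORDS).items()):
        print(group, r)
```

In this toy data, group A is wrongly flagged twice as often as group B, while group B is wrongly cleared twice as often as group A. That mirrors the pattern of unequal mistakes the report described.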

How come algorithms are biased?

To deal with a messy world steeped in various biases, big-data-driven, AI-enabled algorithms were seen as a panacea. Cold computations, it was assumed, would be fairer than prejudiced human judgements. From the advertisements Facebook pushes to the loans we qualify for, algorithms increasingly control the kinds of experiences we have.

As algorithms take over many tasks, from targeting advertisements to sorting CVs for employment, the messy interface between computing power and human activity is becoming visible.

The wake-up alarm was sounded when Cathy O’Neil, a data scientist, documented the chilling instances of ingrained biases in society tainting the supposedly fair and impartial algorithms in her now-famous cautionary best-seller Weapons of Math Destruction.

The recent Facebook–Cambridge Analytica data scandal, in which personal data from millions of Facebook profiles was harvested without the users’ consent and used to unduly influence voters, is a case in point. The illegally obtained data was used for partisan political advertising, seriously undermining representative democracy.

Democratic societies can no longer afford to naively believe machine learning and Artificial Intelligence are ipso facto objective and fair. Oversight over opaque AI-enabled Data-Driven Decision Systems is imperative, failing which prejudices such as racism, xenophobia, sexism or ageism could be perpetuated.

Everything has a history

Unlike humans, computers do not have subjective views on, for example, caste, religion, race, gender and sexuality. Then how come algorithms are often found to be biased?

Our past actions are to blame, says Dr Deepak Padmanabhan, a researcher at the School of Electronics, Electrical Engineering and Computer Science, Queen’s University Belfast, and an adjunct faculty member at the Indian Institute of Technology, Madras.

“If everyone associates the colour blue to boys and pink to girls, then an AI tasked to select toys for boys will select blue toys only because, in this era of machine learning, the AI reads from the data available to it,” Padmanabhan explains.

AI learns from past human behaviour, and the social biases inherent in our historical practices automatically make AI unfair too.

Historical data often carries the biases prevalent in the past. Obviously, a machine learning algorithm trained on such data would reflect more or less the same prejudices. For example, we know that people are not hired and promoted purely on merit. When a data set of past recruitment and promotion decisions is used to find desirable traits among new applicants, marginalised communities that were discriminated against in the past will be at a disadvantage.

“Machine learning algorithms learn from the real-world data created by humans (or processes involving humans), and thus would have within them, entrenched biases such as prejudices and reflections of historical and current discrimination. Typical AI algorithms are focused on learning (i.e., ‘abstracting’) high-level trends within the data and using them for such tasks; this design itself could amplify the discrimination embedded within the data since disadvantaged groups of people are unlikely to be part of mainstream behaviour and are thus likely to be left out of the learnt high-level trends,” cautions Padmanabhan.

Teaching, tweaking AI to be fair

With regular reports of high-profile incidents of AI systems going haywire, the topic of bias and fairness in algorithms is getting serious attention in the AI community.

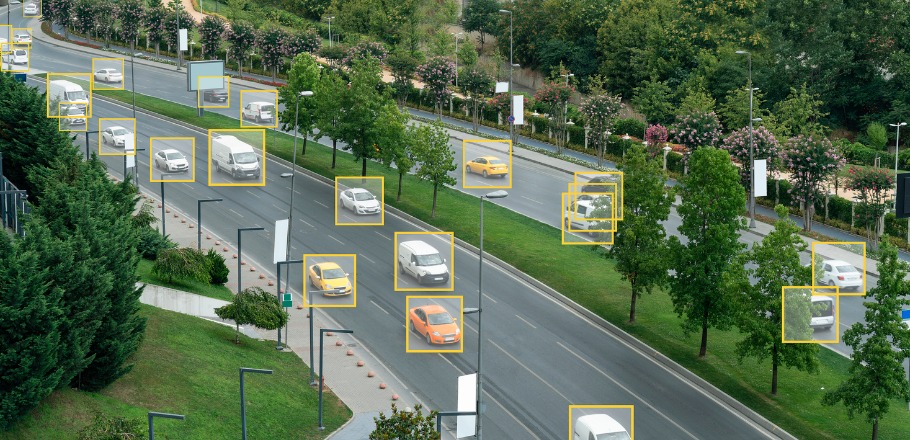

Given the influence of algorithms from credit rating to surveillance profiling, rigorous auditing of algorithms has emerged as one method of detecting if an algorithm is fair.

For example, an algorithm for job selection evaluates various factors, including age, educational qualification and experience, to determine a suitable candidate. Suppose we change just one variable, say the sex of a selected candidate, and the system no longer selects that person. Then we know the algorithm is biased. We can ask the algorithm such ‘what if’ questions to audit its fairness and ferret out biases.
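The ‘what if’ audit described above can be sketched in a few lines. Everything here is hypothetical: `toy_screen` is a deliberately biased rule invented for this illustration, standing in for whatever real selection system is being audited, and the applicant records are made up.

```python
def counterfactual_flips(decide, applicants, attribute, alternatives):
    """Return (applicant, alternative_value) pairs where changing only
    `attribute` changes the decision made by `decide`."""
    flagged = []
    for applicant in applicants:
        original_decision = decide(applicant)
        for value in alternatives:
            if value == applicant[attribute]:
                continue
            # Copy the applicant and change the one attribute under test.
            what_if = {**applicant, attribute: value}
            if decide(what_if) != original_decision:
                flagged.append((applicant, value))
                break
    return flagged


def toy_screen(applicant):
    """Hypothetical, deliberately biased rule: men need 3 years of
    experience to be selected, everyone else needs 5."""
    required = 3 if applicant["sex"] == "male" else 5
    return applicant["experience_years"] >= required


# Invented applicants, for illustration only.
APPLICANTS = [
    {"name": "P1", "sex": "male", "experience_years": 4},
    {"name": "P2", "sex": "female", "experience_years": 6},
    {"name": "P3", "sex": "female", "experience_years": 2},
]

if __name__ == "__main__":
    for person, value in counterfactual_flips(
        toy_screen, APPLICANTS, "sex", ["male", "female"]
    ):
        print(f"{person['name']}: decision changes if sex were {value}")
```

Here only P1 is flagged: selected as a man with four years of experience, rejected when the same record is marked female. A clean result from such a test does not prove a system is fair, since other fields can act as stand-ins for the sensitive attribute, but a flagged case is direct evidence of bias.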

Another approach relies on self-governing algorithms, called shadow algorithms, to monitor the AI for biases. In some cases, the decisions are really judgements (like finding the ‘best’ job applicant) that appear as ‘biases’. These cannot be wished away. The only solution, some developers argue, is to make such biases transparent and public.

IIT Madras makes headway

Recently, Indian Railways received 4.7 million applications for 26,502 advertised posts of assistant loco pilots and technicians. Imagine screening each of these applications manually: a near impossibility.

AI and algorithms cannot be dispensed with. Researchers at IIT Madras, Savitha Abraham and Sowmya Sundaram, along with Dr Padmanabhan, have developed a fairer technique using the principle of ‘representational parity’. The new technique is particularly useful when AI technologies have to deal with massive data, such as an overwhelming number of job applicants.

The new technique attempts to cure what are known as clustering biases. When colossal amounts of data have to be sifted, the records are grouped into clusters that share common characteristics. Once a manageable number of clusters has been formed, the organisation can shortlist or reject an entire group.

However, “AI techniques for data processing, known as clustering algorithms, are often criticised as being biased in terms of ‘sensitive attributes’ such as race, gender, age, religion and country of origin”, points out Dr Padmanabhan.

The representational parity principle attempts to ensure that the proportion of specific groups in the dataset is reflected in the ‘chosen subset’ (or any cluster). For example, for the attribute of gender, ‘representational parity’ calls for every grouping or cluster, say the shortlisted candidates, to have the same gender ratio as the dataset.
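One simple way to see what representational parity asks for is to measure how far each cluster's mix drifts from the mix in the whole dataset. The sketch below does only that measurement; it is not the researchers' clustering algorithm. The function names, the "F"/"M" labels and the toy cluster assignments are assumptions made for this example.

```python
from collections import Counter


def proportions(values):
    """Share of each distinct value in a list."""
    counts = Counter(values)
    total = len(values)
    return {value: n / total for value, n in counts.items()}


def parity_gaps(attribute_values, cluster_labels):
    """For each cluster, return the largest absolute difference between
    the cluster's share of any attribute value and that value's share in
    the whole dataset. A gap of 0.0 means the cluster mirrors the dataset."""
    overall = proportions(attribute_values)
    members = {}
    for value, label in zip(attribute_values, cluster_labels):
        members.setdefault(label, []).append(value)

    gaps = {}
    for label, values in members.items():
        local = proportions(values)
        gaps[label] = max(abs(local.get(v, 0.0) - share) for v, share in overall.items())
    return gaps


# Invented toy data: eight applicants, half "F" and half "M".
GENDER = ["F", "F", "F", "F", "M", "M", "M", "M"]
SKEWED_CLUSTERS = [0, 0, 0, 1, 0, 1, 1, 1]    # cluster 0 is 75% "F"
BALANCED_CLUSTERS = [0, 0, 1, 1, 0, 0, 1, 1]  # both clusters are 50% "F"

if __name__ == "__main__":
    print("skewed:", parity_gaps(GENDER, SKEWED_CLUSTERS))
    print("balanced:", parity_gaps(GENDER, BALANCED_CLUSTERS))
```

In the skewed grouping, each cluster is 25 percentage points away from the dataset's 50:50 ratio. In the balanced grouping, the gap is zero. A parity-aware clustering method would aim to keep such gaps small while still grouping similar records together.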

Until now, there was a catch: algorithms could provide representational parity for a single attribute but could not ensure it over a broad set of sensitive attributes. The team led by Dr Padmanabhan has come up with algorithmic machinery that can deliver representational parity across many attributes at once.

“Our unique contribution in this research is that of providing the algorithmic machinery to [handle multiple sensitive attributes,] which may include some or all of gender, ethnicity, nationality, religion and even age and relationship status in many settings. It is indeed necessary to ensure fairness over a plurality of such ‘sensitive’ attributes in several contexts, so AI methods are better aligned to democratic values,” Dr Padmanabhan explains.

The improved technique can handle ‘multi-valued’ sensitive attributes (like race) and numerical ones (like age) simultaneously.

“Different researchers take different approaches towards this broader goal of making sure that AI is more aligned to modern democratic values. While we do not target to explicitly handle the bias in data collection, our focus is on using ‘representational parity’ as the paradigm towards mitigating biases within the results of clustering,” he says.

“While our algorithm cannot ‘correct’ prejudices and discrimination within the data, what it targets is to ensure that reflections of such biases and discrimination are mitigated as much as possible, within the results,” says Padmanabhan.

Savitha, a member of the research team, reports that in countries like India with drastic social and economic disparities, “employing AI techniques directly on raw data results in biased insights, which influence public policy, and this could amplify existing disparities. The uptake of fairer AI methods is critical, especially in the public sector, when it comes to such scenarios.”

Because AI makes predictions from generalised statistics rather than from an individual’s situation, those predictions can be way off the mark. As with any human activity, algorithms will also have biases. Yet these tools can offer us much wiser and fairer decisions if the fairness and transparency of Data-Driven Decision Systems are examined continuously.

(The author is a science communicator with Vigyan Prasar, New Delhi)