Chennai police’s use of facial recognition technology can do more harm than good

India unfortunately doesn’t have a privacy protection law as many democracies do. Digital technologies are double-edged swords: the way data and digital tools are designed and used can easily become intrusive and erode human rights.

Traditional police watch-towers and other surveillance methods had only a limited reach and were primarily based on immediate needs. But in an age when high-tech cubicles in police headquarters can replace the watch-towers and conventional intelligence gathering, a democracy can degenerate into a virtual surveillance society where surveillance gets universalised.

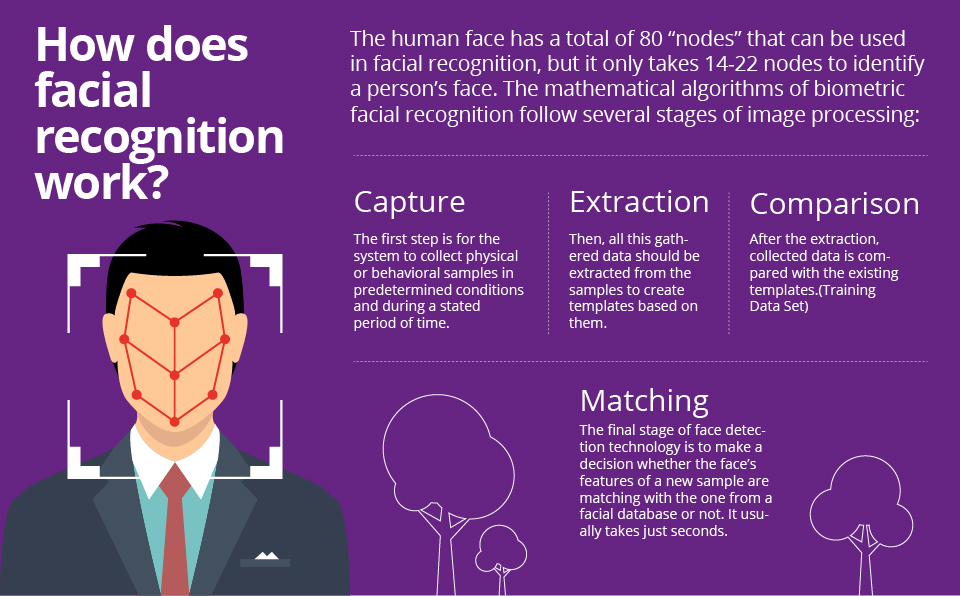

In India, the Chennai Police are at the forefront of this highly controversial venture. They have been using FACETAGR technology, which involves an AI-based face-recognition app that can be used in CCTV cameras as well as smartphones. Faces within a crowd can be compared in real time with facial features of criminals and other wanted persons available in the police database.

However, to track the movements of criminals 24x7, the database would have to include not only ‘criminals’ but almost all citizens, so that the identity and address of a suspect caught on a CCTV camera or a smartphone can be instantaneously traced. In the bargain, the facial features of every Indian would get reduced to “data” and go into the hands of the police.

Why this sounds dangerous

With access to such data, the police, if they want, can track almost every movement of citizens: which flight, train or bus they are taking, where to, and to meet whom; the car they are driving; who they are hanging out with and for how long; the places they are visiting. It is not just citizens’ political participation or business activities but also their social interactions and outings that will be watched by Big Brother.

Imagine a scenario where all voter identity cards, Aadhaar cards, Census registration, driving licences, PDS cards, ESI or PF cards, PAN cards, credit and debit cards, as well as social media profiles and all school and college ID cards are integrated with the FACETAGR database. Now imagine the effect of the police installing CCTV cameras at all airports, railway stations, bus stands, traffic junctions, malls, markets, theatres and other public places. No one and no movement can escape the police’s eye.

This is like imagining a society where there is a ‘Hoover file’ on every citizen. The technology can establish the identity of a person in real time, or at most in a few seconds, and can handle more than 3 million searches per second. That’s what Chennai is heading for.

In April 2018, Vijay Gnanadesikan, director of FACETAGR, said the technology was first used by the Chennai Police in T Nagar back in October 2017. Initially, the photos of 12,000 criminals had been uploaded. However, by April 2018, the database had photos and other records of 67,000 people from Tamil Nadu and Puducherry.

The Chennai Police have reportedly decided to expand the use of their FACETAGR app to other southern states as well. This step, they claim, will help them curb the movement of inter-state criminals. The respective crime records bureaus of Andhra Pradesh, Telangana, Karnataka, Kerala and Puducherry have already provided the data of criminals. Soon, Chennai Police headquarters will become the hub of this new technology in southern India and help install the technology in other states as well.

Face recognition technology was first installed at the Chennai International Airport at Meenambakkam and was later extended to 10 out of 12 police districts. While 250 CCTV cameras were installed in T Nagar alone, they are being gradually extended to other areas. Beyond Chennai city, festivals involving mass gatherings, like the Pasumpon Muthuramalinga Thevar anniversary and the Thiruvannamalai Deepam festival, are also being given face recognition coverage.

More than 1,000 police personnel have been deployed exclusively for this project and have been given smartphones with the FACETAGR app.

So, how is the performance so far?

Police authorities claim that this technology has helped nab about two dozen criminals and rescue some 100 missing children, something the police should have been able to do even without this technology. As the Tamil saying goes, this is “digging a mountain to catch a mouse”: compromising the privacy of all citizens is too big a price for this small achievement.

Here’s why.

According to several experts in the field, criminals can easily evade the technology by stuffing their mouths with toffees, which distorts the shape of their chins in the image. Worse, as the technology works by approximation, many innocents who closely resemble criminals can also be targeted because of sheer technical glitches.

Around 80 countries in the world now have privacy laws. Even Iceland has such a strong privacy law that it refused any agreement with the US — its largest trading partner — to give access to personal data of its citizens.

In fact, in the US, where facial recognition was widely used, more and more cities are now taking a step back following sustained campaigns by civil liberty outfits, technologists as well as politicians. Somerville in Massachusetts became the second city in the country to ban facial recognition on June 27 this year after San Francisco did the same in May. Oakland is also considering a similar move while California lawmakers have moved for a statewide prohibition.

Many big tech companies are also realising the potential for abuse of this technology. In December last year, Google said it won’t sell its facial recognition technology until loopholes for abuse were closed.

The UK, too, has been facing widespread protests by rights activists against facial recognition. Liberal Democrat councillor Ed Bridges, as reported by the Independent, has mounted a legal challenge after the South Wales Police allegedly scanned his face without his consent, first while he was doing his Christmas shopping in Cardiff and then at a peaceful anti-arms trade protest in 2018. The court is still hearing the case. While the UK uses this tech for immigrant profiling, no other police force in Europe is doing so.

However, China is expanding facial recognition at the rate of 5,00,000 face scans per month with greater focus on Uighurs for minority profiling, according to a report in The New York Times.

In India, unfortunately, no law, including the Information Technology Act 2000, addresses the issue of violation of privacy by the police or any private agency through the use of facial recognition technology. Nor are there any rules to regulate the use of such databases of citizens’ personal information, collected without their consent, except in the case of Aadhaar. The Supreme Court in its landmark Aadhaar judgment made several observations on citizens’ right to privacy that can be invoked to challenge the very legality of this controversial venture of the Tamil Nadu Police. The matter, however, was not even discussed in the state Assembly before its launch.

Needless to say, no civilised society can afford to leave its freedom in the hands of its policemen. With the Chennai Police going ahead unhindered with this massive surveillance plan, George Orwell’s 1984 no longer reads like fiction.