
What’s common between your friends-and-family network and artificial neural networks


Imagine you are going out to dinner with your family. Should you eat pizza, Mughlai, or Chinese food? Of course, you will ask your partner, daughter, and younger son. One option is to side with the majority.

But it is the teenage daughter's birthday, so it cannot be one person, one vote. Her request will be considered, but so will the opinions of others. You pick a cuisine: Mughlai.

Now, the issue is which restaurant to go to. Your son claims his friends have recommended a new eatery, but you want to be sure. You consult three of your friends and ask for their feedback.

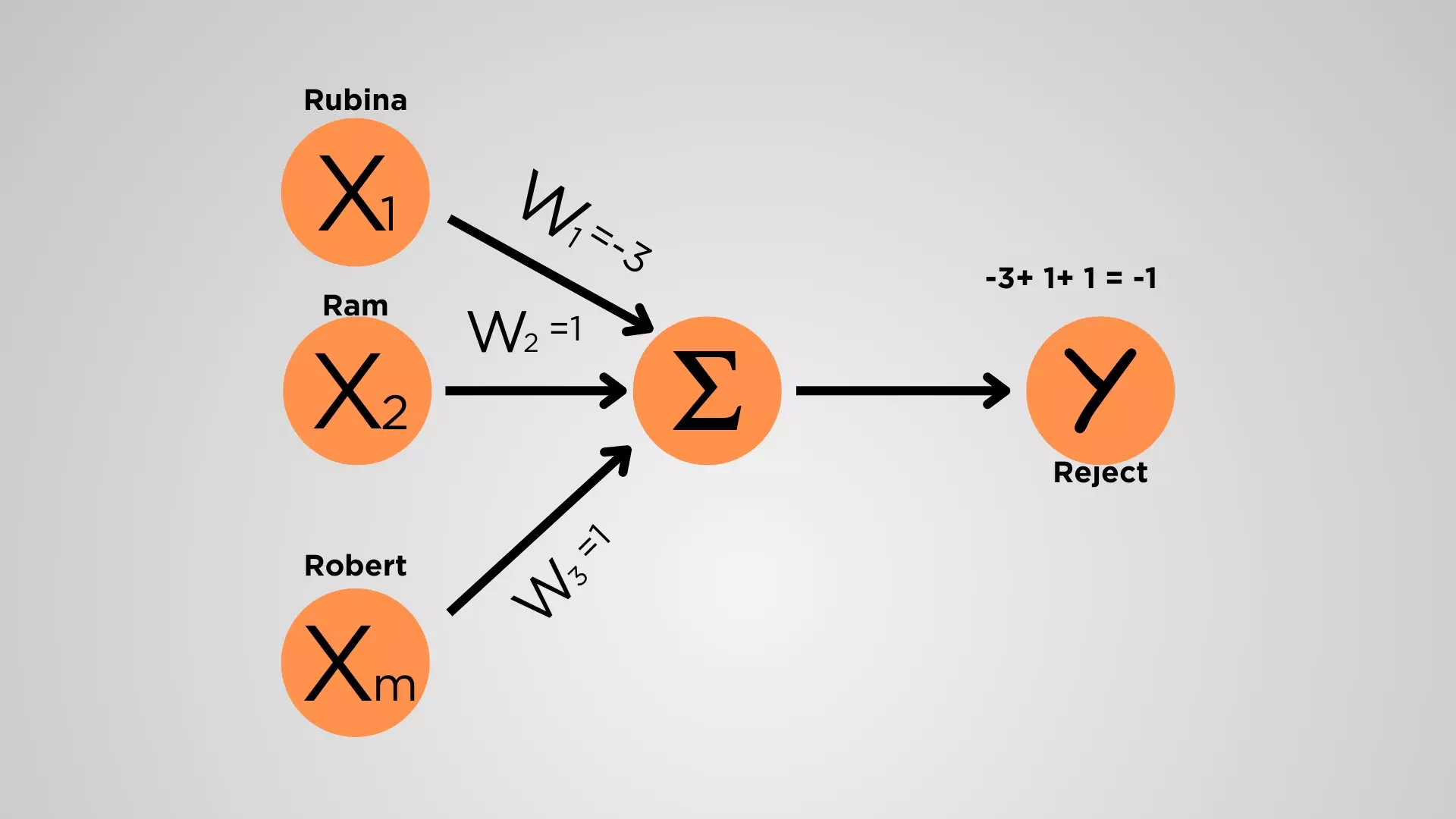

Rubina aunty says 'no'; Grandpa Ram and Robert uncle say 'yes'. It is one 'no' against two 'yeses'. You choose to go with the majority and accept your son's recommendation.

However, you may trust some friends more because they have extensive experience in fine dining. For example, suppose Rubina aunty is a fine-dining aficionado. In that case, her advice is worth three times as much as that of Grandpa Ram, who you know is an ignoramus when it comes to good food. Rubina's 'no' now counts as three votes, while Ram and Robert have one 'yes' each. Three 'no' votes outweigh two 'yes' votes, so you decide to avoid the eatery.
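The arithmetic of the two decisions can be written as a short Python sketch. It assumes, purely for illustration, that a 'yes' counts as +1 and a 'no' as -1, and that a tie means you stay away; the weights are the ones from the story.

```python
# Each friend's vote: +1 for 'yes', -1 for 'no' (an illustrative encoding).
votes = {"Rubina": -1, "Ram": +1, "Robert": +1}

# One person, one vote: every friend counts equally.
equal_weights = {"Rubina": 1, "Ram": 1, "Robert": 1}

# Trust-based weights: Rubina aunty's advice counts three times as much.
trusted_weights = {"Rubina": 3, "Ram": 1, "Robert": 1}


def weighted_tally(votes: dict, weights: dict) -> int:
    """Sum of each vote multiplied by the weight given to that friend."""
    return sum(weights[name] * vote for name, vote in votes.items())


def decide(votes: dict, weights: dict) -> str:
    """Go only if the weighted 'yes' votes outweigh the weighted 'no' votes (a tie means avoid)."""
    return "go" if weighted_tally(votes, weights) > 0 else "avoid"


print(weighted_tally(votes, equal_weights), decide(votes, equal_weights))      # 1 go
print(weighted_tally(votes, trusted_weights), decide(votes, trusted_weights))  # -1 avoid
```

Equal weights reproduce the majority verdict; trusting Rubina aunty three times as much flips it.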

The McCulloch-Pitts neuron model assumes that a neuron 'fires' when its combined input crosses a threshold.

In 1943, neurophysiologist Warren McCulloch and logician Walter Pitts proposed that neurons function in this way in the human brain. This breakthrough neuron model, now known as the McCulloch-Pitts neuron, visualised neurons as logic gates and represented neural networks as digital circuits.

Researchers have developed deep neural network algorithms for artificial intelligence based on this idea. John Hopfield of Princeton University in New Jersey and Geoffrey Hinton of the University of Toronto in Canada, who made significant contributions to this field, have jointly been awarded the Nobel Prize in Physics 2024 “for foundational discoveries and inventions that enable machine learning with artificial neural networks”.

How a neuron computes

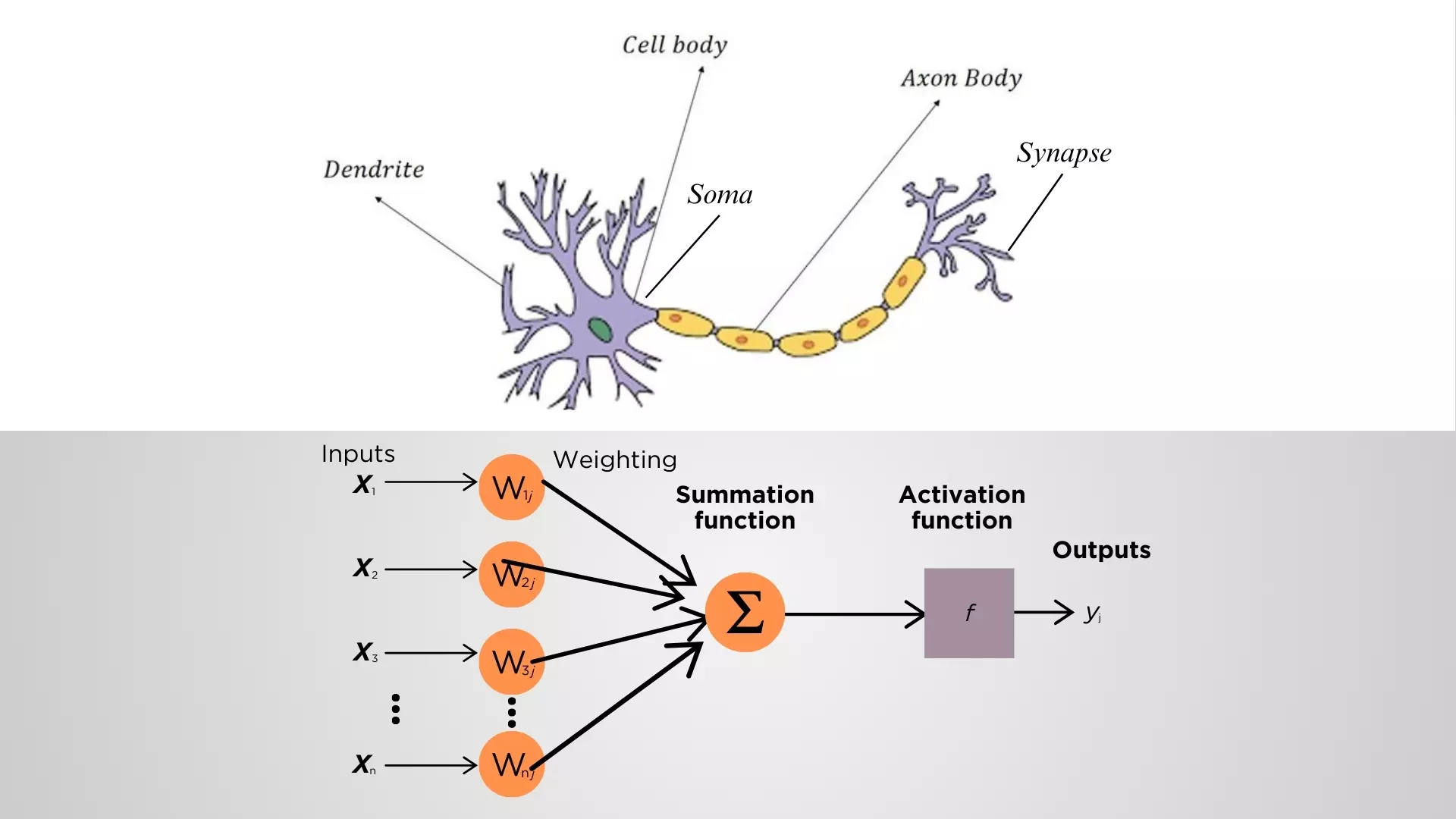

A neuron has three major parts: dendrites, a cell body (or soma) and an axon, much as a tree has branches, a trunk and roots. Dendrites are tiny, branching extensions of the cell body that receive messages from other neurons, just as you receive recommendations from friends and relatives. The soma contains the cell's nucleus and is where metabolic activity occurs. Just as you weigh the recommendations and reach a decision, the soma processes the information gathered by the dendrites. The axon originates at the soma. If triggered, the axon delivers electrical signals known as action potentials to neighbouring neurons. Signals pass from one neuron to the next at the axon terminals, across junctions called synapses.

Artificial neurons mimic the function of biological neurons, with input, processing and output.

In the McCulloch-Pitts model, a neuron receives signals from the neurons around it, combines them and passes the result on to other neurons. However, a neuron weighs signals from different neighbours differently, just as Rubina was given more weight than Ram.

You make a decision after considering the opinions of your friends and family. Similarly, in artificial and biological neural networks, neurons integrate signals from their neighbours and pass the result on to other neurons. When your friends' weighted votes cross a threshold, you accept the recommendation; similarly, when the combined signals cross a threshold, the neuron fires. You chose to disregard your son's restaurant suggestion because the weighted support from your friends fell short. Likewise, when the combined signals fall below the threshold, the neuron does not fire. This is a basic caricature of the McCulloch-Pitts neuron model.
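A McCulloch-Pitts neuron can be sketched in a few lines of Python. This version assumes binary inputs and fires when the weighted sum reaches the threshold; choosing the threshold turns the same neuron into an AND or an OR gate, echoing the logic-gate view of McCulloch and Pitts. The negative weight in `not_gate` is a simplification: in the original 1943 model, an active inhibitory input blocked firing outright.

```python
from typing import Sequence


def mcculloch_pitts(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a: int, b: int) -> int:
    # Fires only when both inputs fire (1 + 1 reaches the threshold of 2).
    return mcculloch_pitts([a, b], [1, 1], threshold=2)


def or_gate(a: int, b: int) -> int:
    # Fires when at least one input fires.
    return mcculloch_pitts([a, b], [1, 1], threshold=1)


def not_gate(a: int) -> int:
    # Negative (inhibitory) weight: an active input pushes the sum below the threshold.
    return mcculloch_pitts([a], [-1], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```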

Artificial neural networks

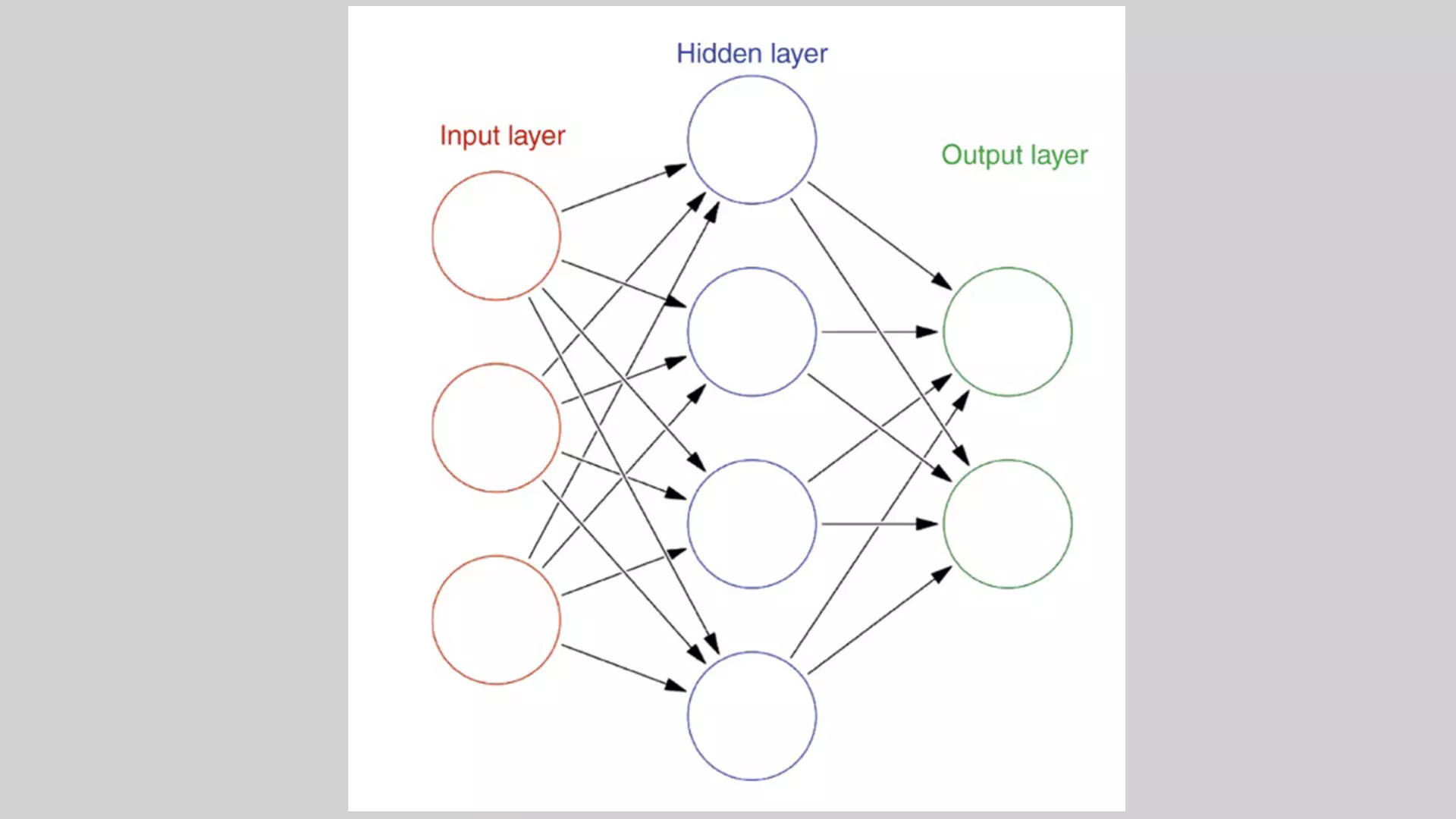

Artificial neural networks (ANNs) are designed to behave like the networks of neurons in the brain. While biological neural networks are made of biological neurons, artificial neural networks use digital neurons, or perceptrons, connected in various ways.

You decided on the restaurant to visit for your daughter's birthday celebration based on the signals supplied by your pals. At some point, your friends and family may seek your counsel. Perhaps Raakhi, your office colleague, will consider your suggestions, and your experience will now be valued. As you gain experience, Rubina may seek your counsel a few months later, creating a cyclical connection.

Furthermore, different occasions may call for an entirely different setting and cuisine. Over time, you and your friends will form collective opinions on various restaurants and the cuisines they serve. 'This eatery has good pizza but poor coffee.' 'That one is pricey, and the food is lousy.'

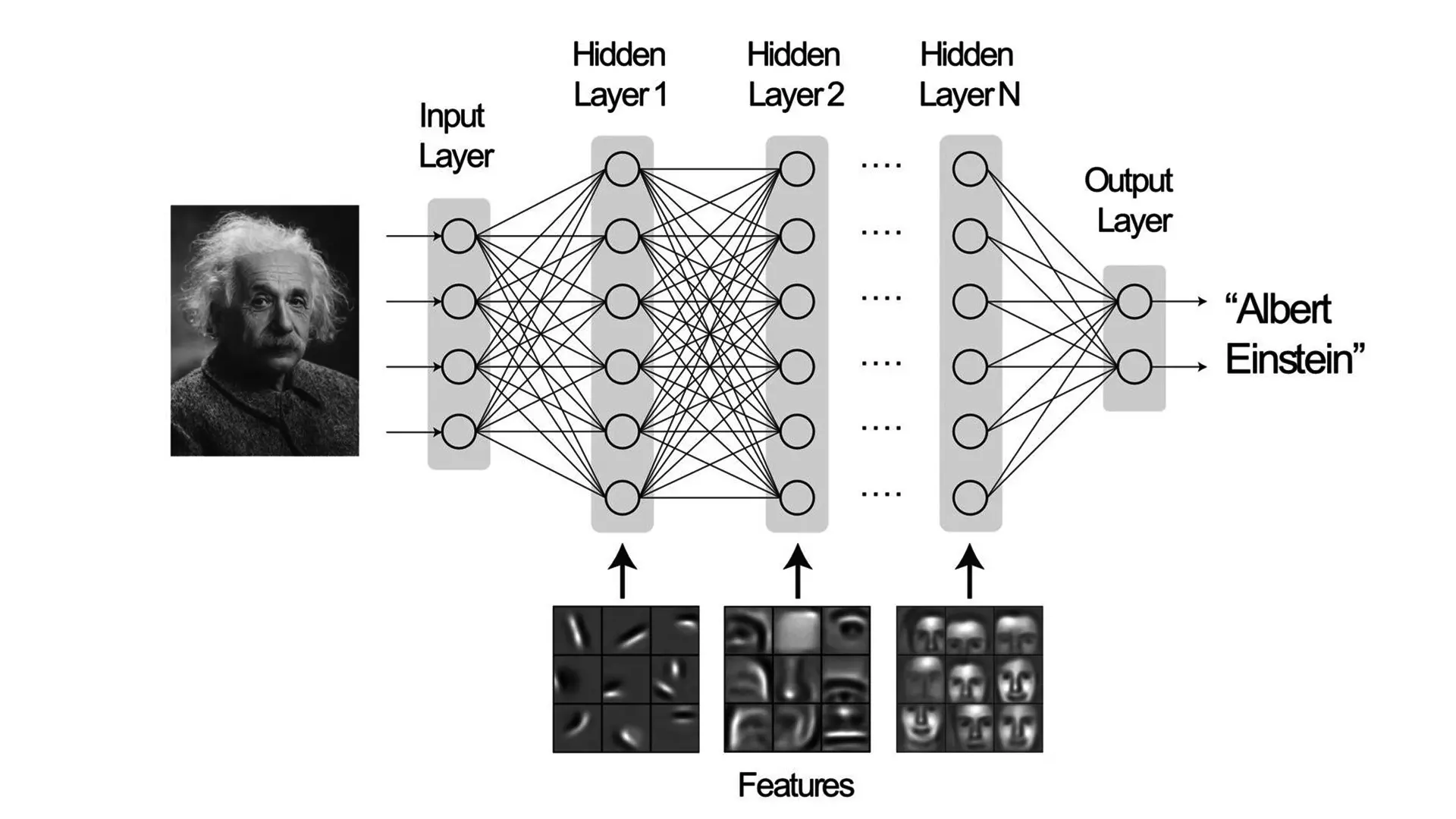

Each circle in this image is a 'neuron'. It receives inputs from other 'neurons' (or 'nodes'), sums them and 'fires' if the total exceeds a threshold. The input layer activates the network, and the output is generated in the last layer.
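The layered flow in the figure can be sketched with threshold neurons in Python. The weights and thresholds below are hand-picked for illustration (they are not learned): two hidden neurons act as OR and NAND, and the output neuron combines them with AND, so the network computes XOR, a function no single threshold neuron can compute on its own.

```python
from typing import List


def fire_layer(inputs: List[int], weights: List[List[float]], thresholds: List[float]) -> List[int]:
    """Each row of `weights` belongs to one neuron, which fires (1) if its weighted sum reaches its threshold."""
    return [
        1 if sum(x * w for x, w in zip(inputs, row)) >= t else 0
        for row, t in zip(weights, thresholds)
    ]


# Hand-picked (not learned) parameters.
HIDDEN_WEIGHTS = [[1, 1], [-1, -1]]  # hidden neuron 1 acts as OR, hidden neuron 2 as NAND
HIDDEN_THRESHOLDS = [1, -1]
OUTPUT_WEIGHTS = [[1, 1]]            # the output neuron acts as AND
OUTPUT_THRESHOLDS = [2]


def forward(x: List[int]) -> int:
    hidden = fire_layer(x, HIDDEN_WEIGHTS, HIDDEN_THRESHOLDS)         # input layer activates the hidden layer
    return fire_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_THRESHOLDS)[0]  # last layer produces the output


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, "->", forward(x))
```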

The network between you and your friends and family is similar to a neural network. Each of you is a neuron, and your connections and their weights form the 'network'. As a network of foodies, you now hold a pattern of good and poor eatery choices suited to many events and cuisines. Like a single person's recommendation, an individual neuron may not always yield the best result. The magic lies in the pattern of connections and the strength of the links between them.

John Hopfield, a physicist, investigated recurrent neural networks using principles from physics. In a recurrent neural network, the output is fed back and reprocessed by the network many times. He focused on their dynamics: how does the network's state change over time?

Hopfield network

A Hopfield network is an artificial neural network that can be analogised to how a group of friends might remember a shared event, such as selecting the ideal restaurant.

Imagine you and your friends are discussing organising a year-end party for friends and family. Some of you recall details of various restaurants and their menus, such as which has the biggest dining hall, what cuisines are served and how they taste. Even if some of you don't remember every detail, you can help each other fill in the gaps by sharing what you remember.

The Hopfield network resembles a circle of friends. Each friend functions like a neuron in the network. The memory of the many food establishments is similar to the patterns that the Hopfield network stores. Talking about the party is like activating the network; everyone brings up what they recall. Even if certain elements are missing or muddled up, this discourse will allow you to recreate your memory of the experiences collaboratively.

In summary, the Hopfield network stores memories (patterns) in such a way that it can recover them even if the input is fragmentary or noisy (for example, if you only recall components of the restaurants and their menus). It exploits neuron connections (similar to friendship connections) to aid in retrieving entire memories.
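The store-and-recall behaviour can be sketched in pure Python using the textbook formulation of a Hopfield network: neurons take the values +1 or -1, memories are stored with a Hebbian rule (connection strengths built from how often two neurons agree across the stored patterns), and recall updates one neuron at a time until the network settles. The two eight-neuron 'memories' are made up for illustration.

```python
import random
from typing import List

Pattern = List[int]  # each neuron is either +1 (active) or -1 (inactive)


def store(patterns: List[Pattern]) -> List[List[float]]:
    """Hebbian storage: w[i][j] = (1/n) * sum of p[i] * p[j] over all patterns, with w[i][i] = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], cue: Pattern, max_sweeps: int = 10, seed: int = 0) -> Pattern:
    """Update neurons one at a time, in random order, until no neuron changes its state."""
    rng = random.Random(seed)
    state = list(cue)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            field = sum(w[i][j] * state[j] for j in range(n))  # weighted input from the other neurons
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # stable state: the network has settled on a stored memory
            break
    return state


# Two made-up 8-neuron "memories" (orthogonal, which keeps recall clean).
memory_a = [1, 1, 1, 1, -1, -1, -1, -1]
memory_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = store([memory_a, memory_b])

# A fragmentary cue: memory_a with its first element flipped.
noisy_cue = [-1, 1, 1, 1, -1, -1, -1, -1]
print(recall(weights, noisy_cue) == memory_a)  # True
```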

Backpropagation

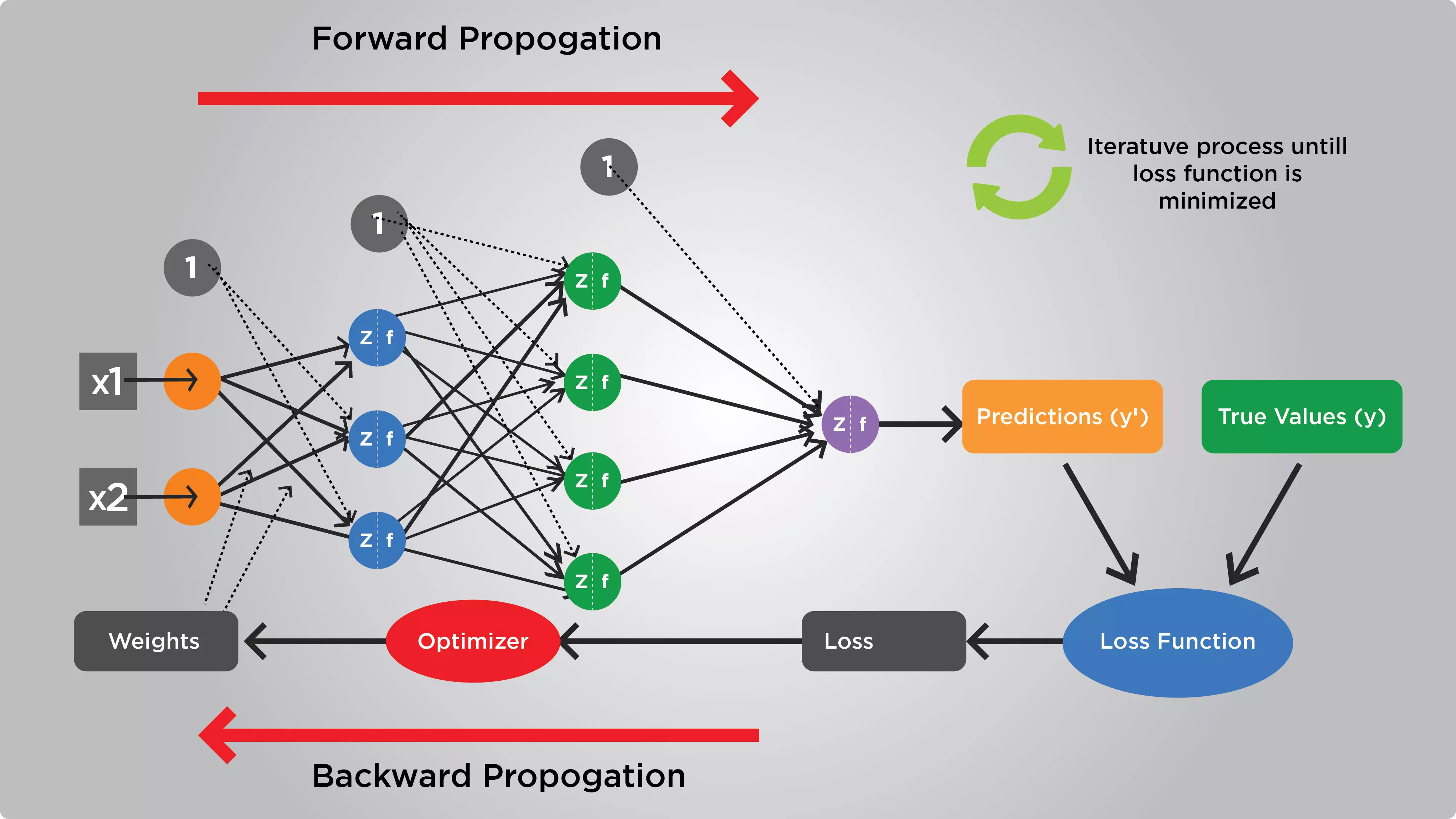

Geoffrey E. Hinton, David E. Rumelhart and Ronald J. Williams published a landmark paper titled 'Learning representations by back-propagating errors' in 1986. The paper popularised backpropagation, a learning technique that repeatedly adjusts the weights of the network's connections to minimise the difference between the actual and desired outputs.

You rejected the restaurant because you gave Rubina aunty's dining advice a weight of 3. However, you may realise that she is not equally reliable on every cuisine. Perhaps Ryan has more refined taste in Chinese food, while Raakhi is the better judge of pizza. You now realise you must reduce her weight from 3 to 2.8 to fit your experience. Similarly, you may discover that Robert is also a connoisseur of fine food when it comes to biryani, and his recommendations are roughly 1.5 times as valuable as Grandpa Ram's. Over time, based on your experiences, you keep adjusting the weights until they best match reality.

This is the idea behind backpropagation. Starting from the final result, you work backwards through the earlier steps and improve them. If you want artificial neural networks to do exciting tasks, you must find the appropriate weights for the connections between artificial neurons. Backpropagation is essential for selecting these weights based on the network's performance on a training dataset.
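A minimal backpropagation sketch in Python follows, assuming a tiny network with two inputs, two hidden neurons and one output, trained on a made-up task (fire only when both inputs are 1). It uses smooth sigmoid neurons rather than hard thresholds, because backpropagation needs activations whose slope can be computed. The learning rate, epoch count and random seed are arbitrary choices.

```python
import math
import random

# Illustrative toy task: the output should fire only when both inputs are 1 (logical AND).
DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
N_IN, N_HIDDEN = 2, 2

rng = random.Random(1)
# w_hidden[j][i] connects input i to hidden neuron j; w_out[j] connects hidden neuron j to the output.
w_hidden = [[rng.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b_hidden = [0.0] * N_HIDDEN
w_out = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
b_out = [0.0]  # kept in a list so the training loop can update it in place


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x):
    """Return the hidden activations and the output activation for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(w_hidden[j], x)) + b_hidden[j]) for j in range(N_HIDDEN)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out[0])
    return hidden, output


def mean_squared_error() -> float:
    return sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA)


def train(epochs: int = 10000, lr: float = 0.5) -> None:
    for _ in range(epochs):
        for x, y in DATA:
            hidden, out = forward(x)
            # Error signal at the output: gradient of the squared error (up to a factor of 2)
            # times the slope of the sigmoid.
            delta_out = (out - y) * out * (1 - out)
            # Backward pass: share the output error among hidden neurons via their outgoing weights.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j]) for j in range(N_HIDDEN)]
            # Gradient-descent step: nudge every weight against its share of the error.
            for j in range(N_HIDDEN):
                w_out[j] -= lr * delta_out * hidden[j]
                for i in range(N_IN):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
                b_hidden[j] -= lr * delta_hidden[j]
            b_out[0] -= lr * delta_out


loss_before = mean_squared_error()
train()
loss_after = mean_squared_error()
predictions = [round(forward(x)[1]) for x, _ in DATA]
print(f"loss {loss_before:.3f} -> {loss_after:.4f}, predictions {predictions}")
```

Running it should show the error shrinking as the weights are nudged step by step, much as Rubina aunty's weight drifts from 3 to 2.8 in the story.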

Training is what makes a deep neural network useful. For example, when we type on WhatsApp, words are autocompleted and autocorrected; this relies on training. At first it can be irritating, but over time the suggestions become good enough to save typing time.

The weight of each connection is adjusted until the predicted value and the true value are nearly the same.

Deep neural network (DNN)

Let us look at the restaurant decision analogy. First, you decide on the cuisine, which involves your partner and two children. Once you've decided on a cuisine, you seek recommendations from friends for suitable eateries.

Aside from the input and output, this network has two layers. You start by deciding on a cuisine and then narrow down your options. However, there are other considerations. Some of the places you've shortlisted are far away, but tables tend to be readily available; others are closer, but the wait is longer. You weigh these factors. Then there is one place with a pleasant atmosphere suited to a birthday celebration, another where the food is excellent, and a third that is a happening place. Some are expensive, while others are affordable.

The restaurant options can be organised into modules, each focusing on a specific aspect. Modules for cuisine, affordability, ambience, hygiene, waiting time, distance and so on can work simultaneously. Partial shortlisting is done layer by layer before a final decision is reached at a higher level. If the eatery is too expensive, the affordability module responds negatively, bringing the computation to a halt at that point. If the cost is reasonable, the computation proceeds.
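The layer-by-layer shortlisting can be sketched in Python as a pipeline of hypothetical modules. This is a hand-written, rule-based illustration of the flow described above, not a trained neural network: the field names, cut-offs and layer groupings are assumptions, and real networks learn such criteria from data rather than having them coded in.

```python
from typing import Callable, Dict, List, Tuple

Restaurant = Dict[str, float]
Module = Callable[[Restaurant], bool]


# Hypothetical modules, each judging one aspect; field names and cut-offs are made up.
def serves_cuisine(r: Restaurant) -> bool:
    return r["serves_mughlai"] == 1


def affordable(r: Restaurant) -> bool:
    return r["cost_per_person"] <= 800  # illustrative budget in rupees


def good_ambience(r: Restaurant) -> bool:
    return r["ambience"] >= 3  # rated out of 5


def hygienic(r: Restaurant) -> bool:
    return r["hygiene"] >= 4  # rated out of 5


def short_wait(r: Restaurant) -> bool:
    return r["wait_minutes"] <= 30


def nearby(r: Restaurant) -> bool:
    return r["distance_km"] <= 10


# Modules within a layer are independent and could run side by side; layers run in order.
LAYERS: List[List[Module]] = [
    [serves_cuisine, affordable],
    [good_ambience, hygienic],
    [short_wait, nearby],
]


def evaluate(r: Restaurant) -> Tuple[bool, str]:
    """Shortlist layer by layer; stop after the first layer in which any module objects."""
    for depth, layer in enumerate(LAYERS, start=1):
        failed = [module.__name__ for module in layer if not module(r)]
        if failed:
            return False, f"rejected at layer {depth} by {', '.join(failed)}"
    return True, "accepted"


pricey = {"serves_mughlai": 1, "cost_per_person": 2500, "ambience": 5,
          "hygiene": 5, "wait_minutes": 10, "distance_km": 3}
family_pick = {"serves_mughlai": 1, "cost_per_person": 600, "ambience": 4,
               "hygiene": 4, "wait_minutes": 20, "distance_km": 6}
print(evaluate(pricey))       # (False, 'rejected at layer 1 by affordable')
print(evaluate(family_pick))  # (True, 'accepted')
```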

The network is triggered by the event for which we are looking for a place to eat out, and it concludes with an output layer that advises accepting or rejecting a specific restaurant as the preferred venue for that event. This is a simplified representation of a deep network. Because a deep neural network is massively parallel, the task is divided among many neurons. Each neuron fires only when its intended criteria are met, allowing the group to reach a decision.

The layered architecture of the human visual cortex, which turns the signal received from the retina into a meaningful perception, inspired the design of deep neural networks. Hinton and neuroscientist Terry Sejnowski created a learning algorithm that helped make deep neural networks possible. A deep neural network (DNN) consists of many layers of neurons arranged in sequence.

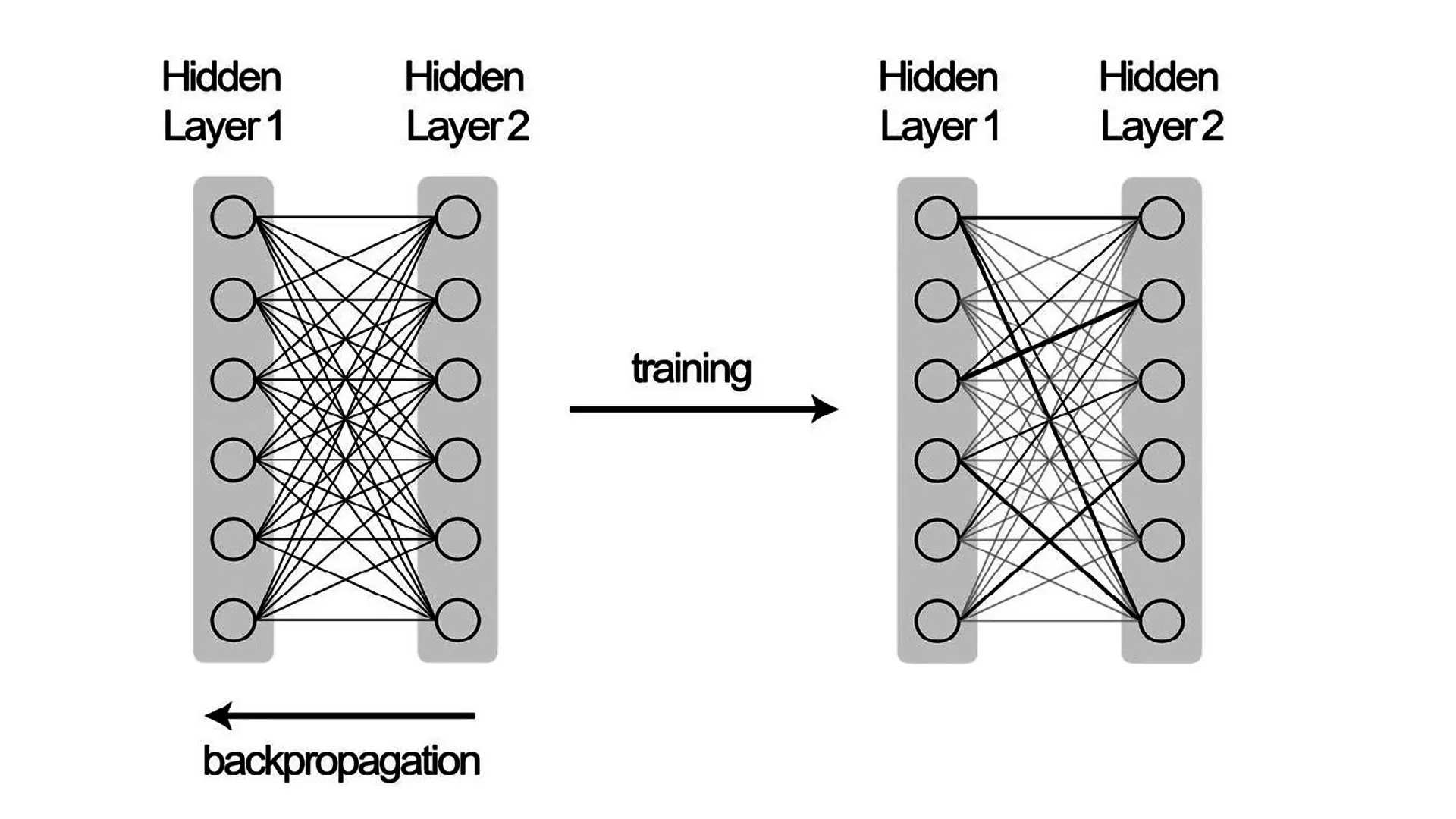

Initially, all network connections are assigned equal strength, as if each individual had one vote. However, when two neurons fire together in response to an input, the connection between them strengthens. Conversely, rarely used connections wither. In AI jargon, this process of strengthening some connections while weakening others is referred to as 'training'.
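The 'fire together, strengthen together' idea is a Hebbian learning rule, which can be sketched in Python as below. The decay term is an assumption added to model rarely used connections withering, and the history of firings is made up. Modern deep networks are trained mainly with backpropagation rather than this rule.

```python
from typing import List, Tuple


def hebbian_step(weights: List[float], pre: List[int], post: int,
                 lr: float = 0.1, decay: float = 0.05) -> List[float]:
    """Strengthen w[i] when input i and the output fire together; let every weight decay a little."""
    return [w + lr * x * post - decay * w for w, x in zip(weights, pre)]


# All three connections start with equal strength, like one person, one vote.
weights = [1.0, 1.0, 1.0]

# Made-up history: inputs 0 and 1 repeatedly fire together with the output;
# input 2 fires only on occasions when the output stays silent.
history: List[Tuple[List[int], int]] = [([1, 1, 0], 1)] * 15 + [([0, 0, 1], 0)] * 5

for pre, post in history:
    weights = hebbian_step(weights, pre, post)

print([round(w, 2) for w in weights])
```

Connections 0 and 1 end up stronger than where they started, while connection 2, never active alongside the output, fades.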

The leftmost layer encodes the input, which in this case is a face. The rightmost layer produces the result: in this example, whether the photo is of Albert Einstein. Training with millions of labelled example faces helps to trim and perfect the weights between neurons. During each trial, backpropagation is used to adjust the connection weights so that the network moves closer to the desired output. After enough training, each successive layer in the network learns to recognise and accurately classify progressively more complex features of faces (e.g., lips, nose, eyes and so on).

Cat’s eye view

In the 1970s, Colin Blakemore and his colleagues dissected cat brains at various embryonic stages. They discovered that the visual cortex grows in layers in response to sensory data. Blakemore and Cooper (1970) conducted an experiment using two unusual cylinders, one with only vertical stripes and the other with only horizontal stripes. During their first few months, newborn kittens were raised in one of the two cylinders and were not exposed to the vivid images of the natural world. The researchers showed that kittens raised with vertical stripes could recognise vertical lines but not horizontal ones throughout their lives. Likewise, kittens raised with horizontal stripes could perceive only horizontal lines, not vertical ones.

In 2005, Hinton and colleagues developed a radical training regimen influenced by these findings. They discovered that each layer could be trained separately, just as the neurons in the kittens' visual systems had been trained to distinguish vertical or horizontal lines. The output from a trained layer is fed into the following layer, which is then trained in turn. By training layer by layer, they demonstrated that learning could be far more efficient.

With training, some connections are strengthened (bold lines) and others weakened (light lines). The network's output is now closer to the true value.

The first layer of neurons learns to detect simple features, such as an edge or a contour, after being bombarded with millions of data points. Once this layer has learnt to recognise these features accurately, its output is passed to the next layer, which trains itself to detect more complex features, such as a nose or an ear. That layer's output is in turn fed into another layer, which trains itself to recognise even higher levels of abstraction, and so on, layer by layer (hence the "deep" in deep learning), until the system can consistently recognise incredibly complex patterns, such as a human face.

Controversy

In its citation, the Royal Swedish Academy of Sciences emphasised the use of ideas from physics in developing deep neural networks, as well as AI's enormous contribution to physics research. However, some physicists are outraged that the Nobel Prize in Physics has been awarded for work in computer science.

It is also worth noting that Hinton recently quit Google to raise awareness of the possible threat AI poses to human society. While acknowledging that AI could bring significant gains in healthcare, better digital assistants and massive productivity increases, he cautioned: “But we also have to worry about a number of possible bad consequences, particularly the threat of these things getting out of control.”