Chandrayaan-3 mission: Why is it all black and white on the Moon? Or is it not?

The Moon looks dull and greyish in many images taken by the Pragyan rover and Vikram lander of India’s Chandrayaan-3 mission. Why are the photos, particularly those taken by Pragyan, monochrome (black and white)? And why does the lunar surface appear in various hues of grey?

The lunar surface appears grey in this image taken by the Lander Horizontal Velocity Camera on August 23, 2023.

There are multiple cameras fitted on the Vikram lander and Pragyan rover. The Vikram hosts primary and redundant Lander Hazard Detection & Avoidance Cameras (LHDAC), the Lander Position Detection Camera (LPDC), the Lander Horizontal Velocity Camera (LHVC) and four lander imaging (LI 1-4) cameras. The Pragyan rover is mounted with two NavCam cameras in the front. Except for the four lander imaging (LI) cameras, all the others produce only monochrome images. The monochrome cameras are used for location detection, safe spot identification for landing, navigation and negotiating the lunar terrain.

Therefore, the images taken by the Pragyan and the navigational cameras are monochrome. However, the pictures taken by the Lander Imaging Cameras are colour (RGB). Nevertheless, even in the colour picture, taken by the LI camera, like the one below, the lunar soil is just grey. Why this is so, we will explain later.

In this image taken by one of the LI cameras, the Pragyan is colourful, but the lunar surface appears black and white.

Now, why did ISRO choose monochrome for the navigational cameras? Monochrome is best suited for hazard detection, location identification and navigational purposes.

To understand why this is so, let us explore how the Lander Hazard Detection and Avoidance Camera (LHDAC) works. It captures an image and extracts information using an AI algorithm to identify perilous craters, dangerous rocks and slippery slopes. The operation of the AI-powered algorithm of the LHDAC is similar to the Face Unlock mobile phone app.

How does your mobile recognise you?

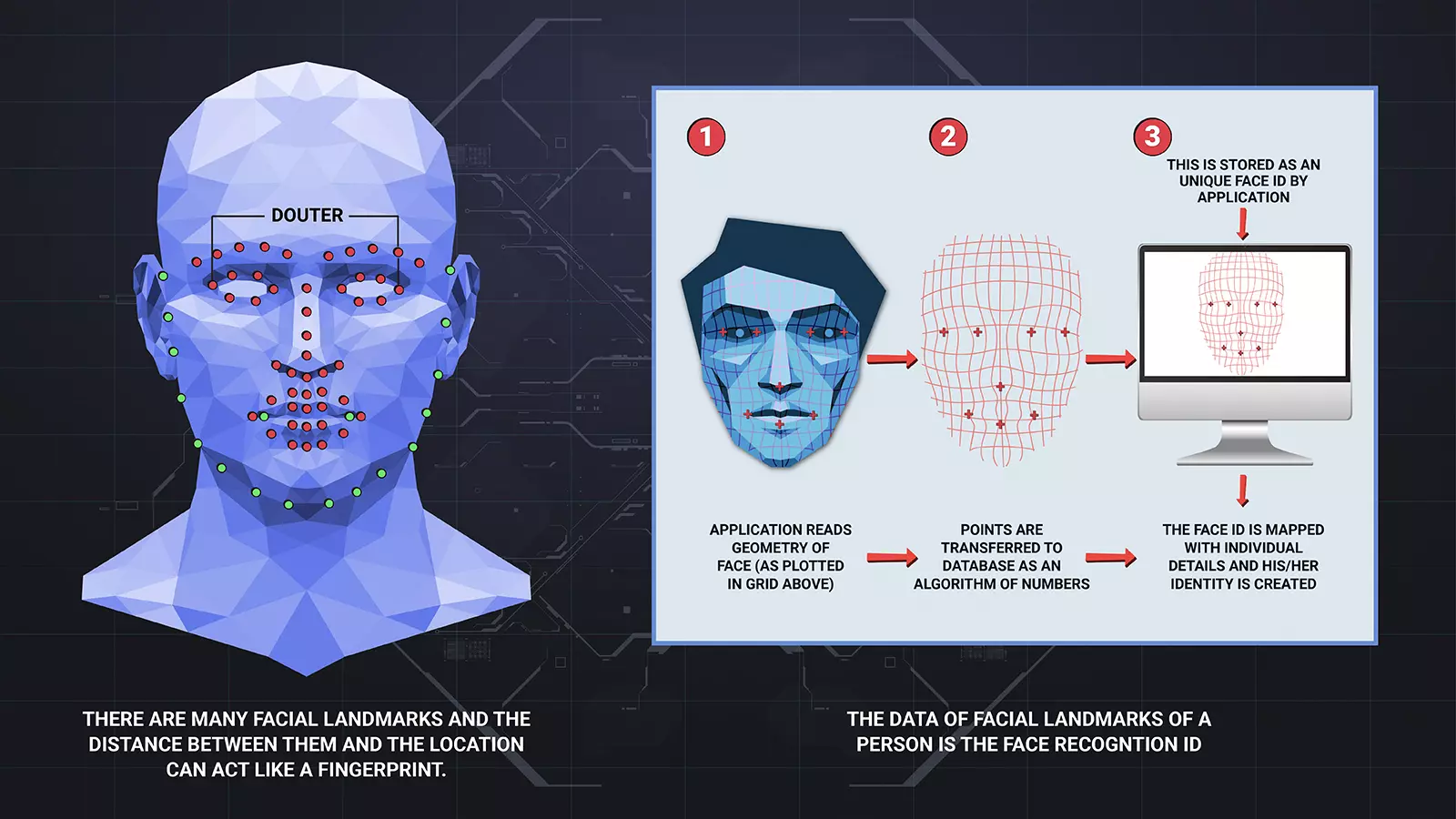

How does face recognition software work? First, an image of your face is captured and digitised. Each pixel on the image corresponds to some point on your face. From this array of pixels, distinguishable facial landmarks are identified. For example, your nose protrudes while the eyes are recessed. The facial features are made by several such peaks and valleys. Each of these landmarks is called a nodal point.

Now, using these facial landmark points, we can measure the distance between the eyes, the width of the nose, the depth of the eye sockets, the shape of the cheekbones, the length of the jawline, the distance from the forehead to the chin and the distance between the nose and mouth. Like fingerprints, no two faces are the same. Therefore, the facial landmark data set for each face will be unique.

In smartphones, the face unlock app works on this principle. When we run the app for the first time, it asks for a selfie. The app extracts these features from the selfie and stores them as your face ID. The next time you show your face to the mobile camera, it compares the stored features with those of the image now before it. If there is a match, the phone unlocks; otherwise, it remains locked.
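The enrol-and-compare idea can be sketched in a few lines of Python. This is only an illustration, not how any real phone does it: the four landmark names, their pixel coordinates and the `tolerance` value are hypothetical, and real systems use far more landmarks and a learned model.

```python
import math

# Hypothetical landmark coordinates (x, y) in pixels, taken from the enrolment selfie.
enrolled = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "chin": (50, 100)}

def signature(landmarks):
    """Distances between landmark pairs, divided by the eye-to-eye distance
    so that the result does not change when the face is nearer or farther."""
    eye_gap = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    pairs = [("left_eye", "nose"), ("right_eye", "nose"), ("nose", "chin")]
    return [math.dist(landmarks[a], landmarks[b]) / eye_gap for a, b in pairs]

def is_match(stored, candidate, tolerance=0.05):
    """Unlock only if every ratio agrees within the (assumed) tolerance."""
    return all(abs(s - c) <= tolerance for s, c in zip(stored, candidate))

face_id = signature(enrolled)

# Same face held closer to the camera: every coordinate doubled.
same_face = {name: (2 * x, 2 * y) for name, (x, y) in enrolled.items()}
# A different face: the nose sits much lower.
other_face = dict(enrolled, nose=(50, 75))

print(is_match(face_id, signature(same_face)))
print(is_match(face_id, signature(other_face)))
```

Dividing by the eye-to-eye distance is a design choice made here so that the comparison depends on the proportions of the face rather than on how large it appears in the frame.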

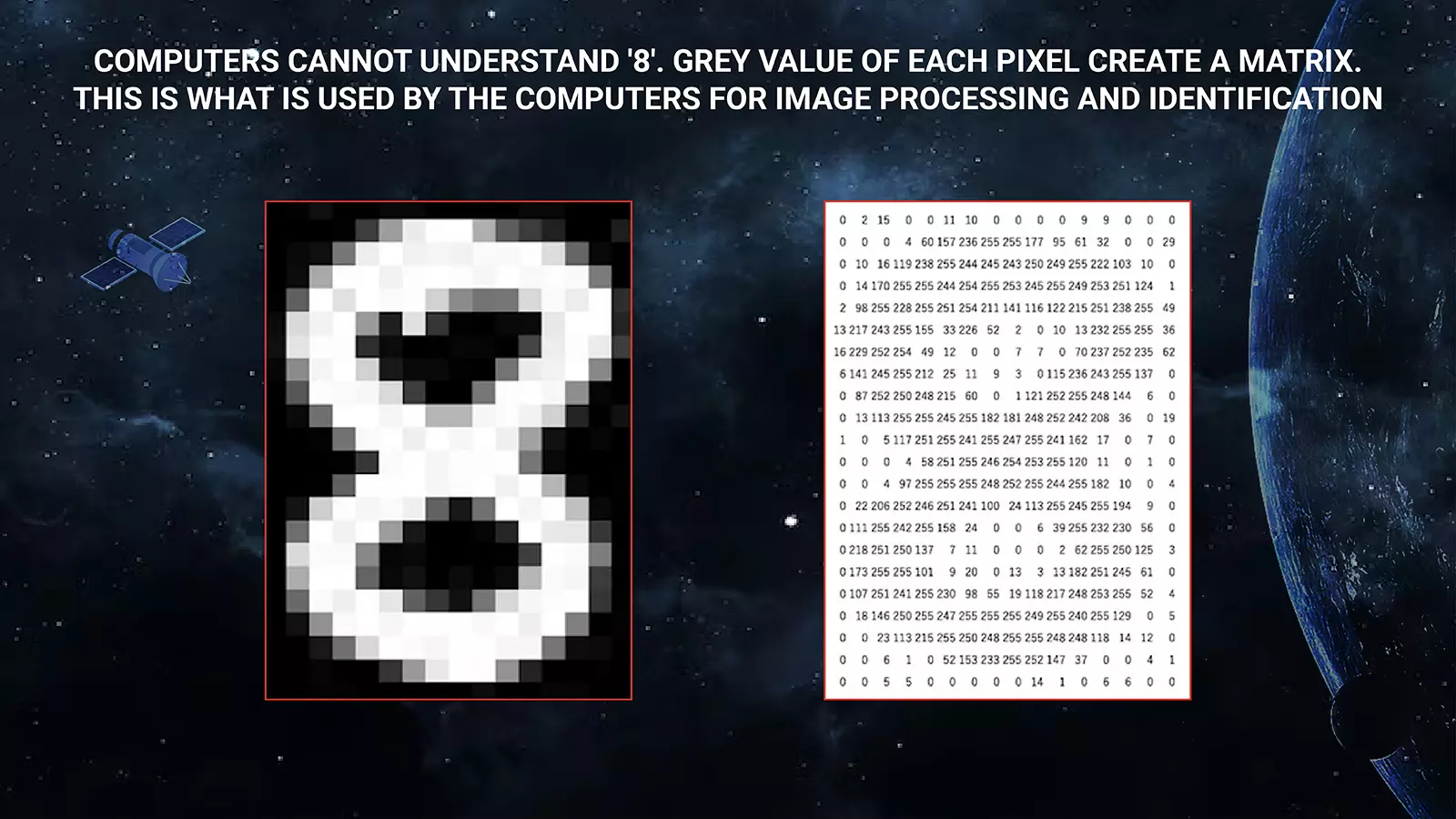

Computer algorithms do not know ‘nose’ or ‘ears’. Therefore, the algorithm must detect the edges accurately to extract facial landmarks. How does edge detection work? Digital images contain pixels. Each pixel is in some shade of grey. If the pixel is black, it is given the value 0; if it is white, it is given the value 255. That is, 256 grey intensities are distinguished.

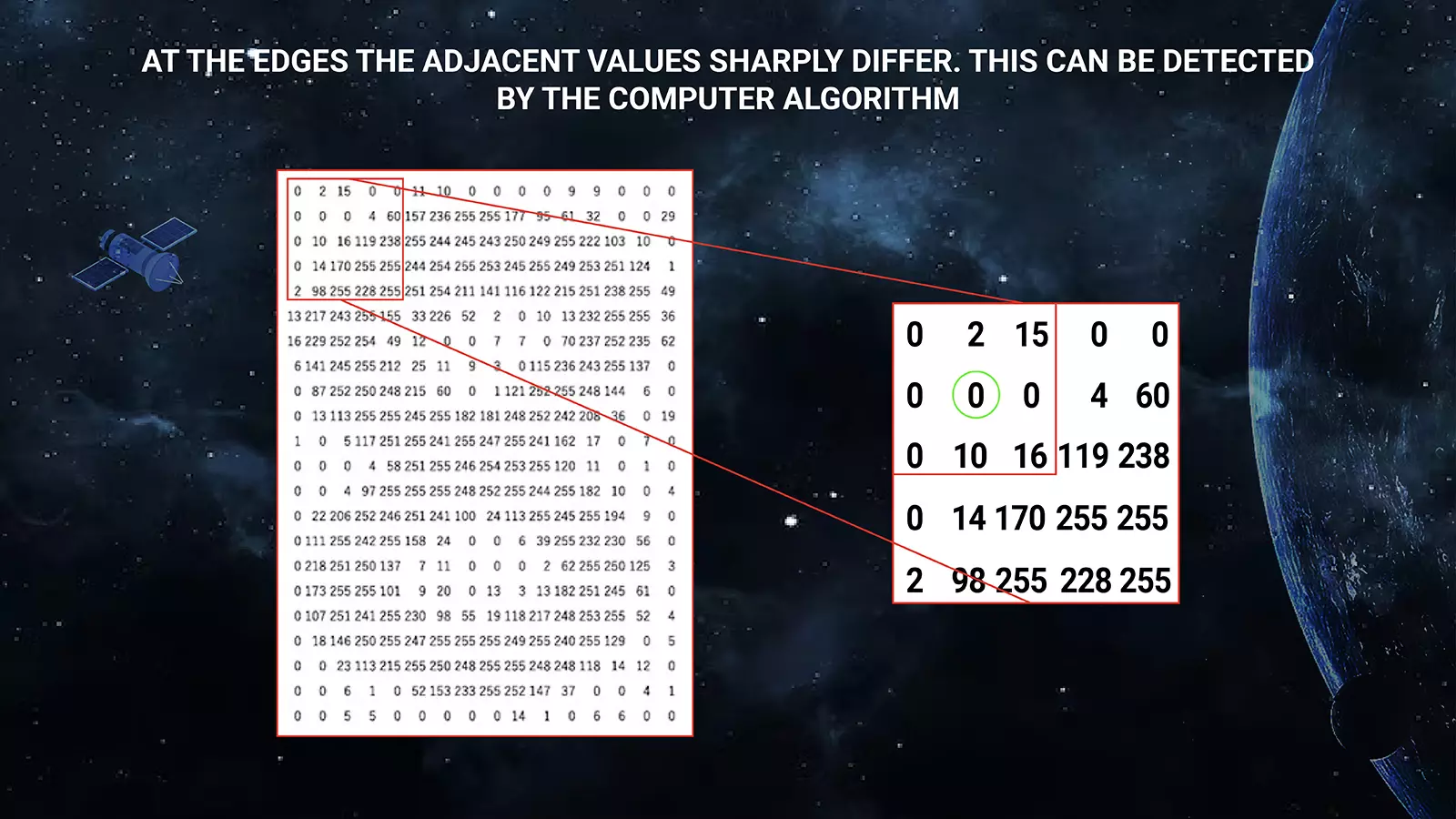

Now, look at the above picture. Each pixel of the 8 is converted into a greyscale value. These values are arranged in a matrix. Closely examine the greyscale values. Can you recognise the edges? Now compare the greyscale values of pixels at the edges with those of the adjacent pixels. At the edges, the values of neighbouring pixels differ significantly. This is a difference that computer algorithms can recognise.
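A minimal sketch of this neighbour comparison is given below. It is not the method used by any particular camera or app; the tiny 4 x 4 image and the `threshold` of 100 are made up for illustration. A pixel is marked as an edge when its grey value differs sharply from the pixel to its right or the pixel below it.

```python
def edge_map(image, threshold=100):
    """Mark a pixel 1 where it differs from its right or lower neighbour
    by more than the threshold, otherwise 0."""
    rows, cols = len(image), len(image[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Pixels on the last column or row have no right or lower neighbour.
            right = abs(image[r][c] - image[r][c + 1]) if c + 1 < cols else 0
            down = abs(image[r][c] - image[r + 1][c]) if r + 1 < rows else 0
            if max(right, down) > threshold:
                edges[r][c] = 1
    return edges

# A dark 2 x 2 square (value 10) on a white background (value 255).
image = [
    [255, 255, 255, 255],
    [255,  10,  10, 255],
    [255,  10,  10, 255],
    [255, 255, 255, 255],
]

for row in edge_map(image):
    print(row)
```

The 1s trace the outline of the dark square, while the uniform interior and background stay 0.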

One standard method is to slide a 3 x 3 window across the image. In this technique, called the moving average, the value of each pixel is replaced with the average value of the 3 x 3 matrix surrounding it, which suppresses random noise. Alternatively, in the image segmentation process, all pixels at or above a given intensity (say 100) are replaced with a white pixel (intensity 255) and all those having values less than that intensity are replaced with a black pixel (intensity 0), which heightens the contrast. Both make edge identification more straightforward.
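Both operations are simple enough to sketch in plain Python. The sample values and the cut-off of 100 are illustrative, and the handling of border pixels (averaging only the neighbours that exist) is an assumption made here, since the text does not say how borders are treated.

```python
def moving_average_3x3(image):
    """Replace each pixel with the integer mean of the 3 x 3 block around it.
    Border pixels average only the neighbours that actually exist."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            block = [image[i][j]
                     for i in range(max(0, r - 1), min(rows, r + 2))
                     for j in range(max(0, c - 1), min(cols, c + 2))]
            out[r][c] = sum(block) // len(block)
    return out

def threshold(image, cutoff=100):
    """Pixels at or above the cutoff become white (255), the rest black (0)."""
    return [[255 if p >= cutoff else 0 for p in row] for row in image]

# A single bright noisy pixel in a dark patch.
noisy = [
    [10,  10, 10],
    [10, 250, 10],
    [10,  10, 10],
]

smoothed = moving_average_3x3(noisy)
print(smoothed[1][1])            # the spike is pulled down towards its neighbours
print(threshold([[99, 100, 200]]))
```

After averaging, the isolated bright pixel falls below the cut-off, so thresholding the smoothed image would no longer report it as a feature.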

The facial landmarks are edges. Thus, an insentient algorithm can identify the edges, that is, facial features, without ‘knowing’ the ‘nose’ or ‘eye’.

Colour image processing

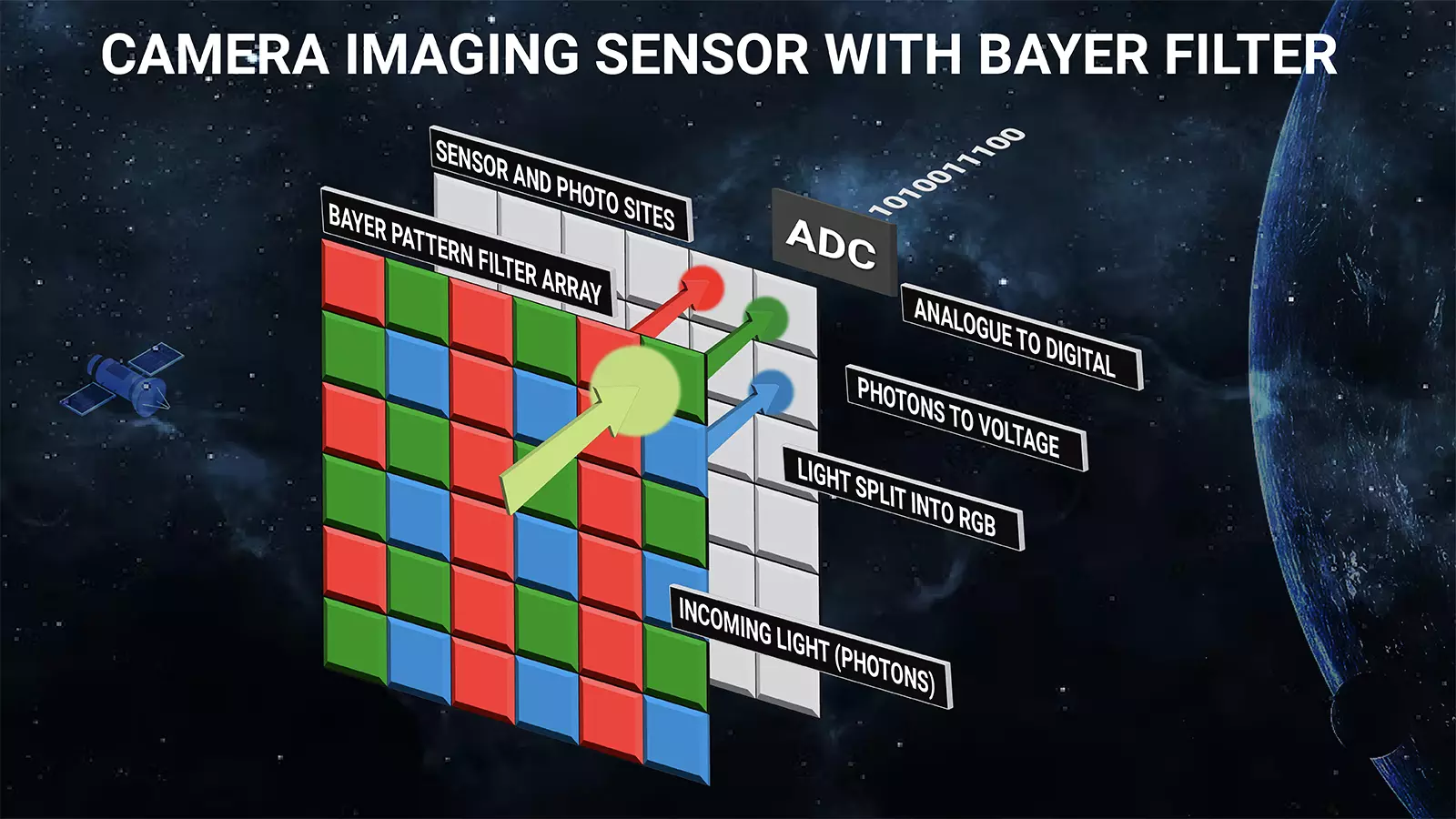

The above explains how the monochrome image is used to extract features. What about colour images? How do they work? In a digital colour camera, the intensities of Red, Green and Blue (RGB) are measured for each pixel. Again, the values are between 0 and 255 for each of these. In the case of red, 0 means no red at all and 255 means the brightest red.

Each pixel has three values in a colour image but just one in monochrome.

Face detection programs built with tools such as OpenCV, neural networks and Matlab often transform the picture from RGB to greyscale first. Better contrast is obtained with greyscale, which makes it easy to detect the edges and facial landmarks.
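As an illustration of the conversion, the sketch below collapses the three RGB values of a pixel into one grey value. The weights 0.299, 0.587 and 0.114 are the commonly used luminance weights and are an assumption here; the article does not say which formula any particular program applies.

```python
def to_grey(pixel):
    """Convert one (R, G, B) pixel, each 0-255, to a single grey value 0-255
    using the common luminance weights (assumed, not taken from the article)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def image_to_grey(image):
    """Apply the conversion to every pixel of a colour image."""
    return [[to_grey(p) for p in row] for row in image]

colour_row = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
print(image_to_grey([colour_row]))
```

Green is weighted most heavily because the eye is most sensitive to it, so a pure green pixel ends up a lighter grey than a pure red or pure blue one.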

Training the algorithm

The early face recognition software was based on human knowledge. We know that a face must have a nose, eyes, and mouth at certain distances and positions relative to each other. However, this method of building a knowledge-based algorithm has limitations. A big problem is creating appropriate rules. If the rules are too general, we get more false positives; if too strict, we get more false negatives.

Today, most algorithms use what is known as machine learning techniques, such as the Convolutional Neural Network (CNN) Model for Face recognition. Like training a dog in a set of commands, the algorithm is trained by inputting different facial images. The algorithms crunch the pixel values and identify a pattern in the multiple images of the same person. Over time, with training, it can also identify differences between the faces of two people. After the training, the algorithm is tested to see how reliable it is in recognising facial images of the same person and distinguishing one person’s face from another. The algorithm is deployed only if it passes this test. If the rate of success is unsatisfactory, it is sent for re-training.

LHDAC algorithm

LHDAC works on a similar principle. In this case, the task is not to identify faces, but craters larger than 1.2 metres, boulders bigger than 28 centimetres and slopes steeper than 10 degrees. These are the tolerance levels for a safe landing spot. Just as the face recognition machine learning algorithm picks up and detects facial landmarks, the LHDAC artificial intelligence-powered algorithm extracts features of craters, boulders and slopes from the training data. Edge detection is the key to these. The craters have some distinct characteristics; they are mainly circular or elliptical in shape and have a rim at their edges. Further, the craters, boulders and slopes cast sharp shadows when the Sun is at a low angle.

From the size of the edges and the shape of the shadow, the algorithm can compute the diameter of a crater and its depth. Likewise, the shadow cast by a boulder can be used to estimate its height. From these, the algorithm can detect safe spots free of large craters, oversized rocks and steep slopes.
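Once the hazards in a patch of ground have been measured, checking them against the tolerance levels quoted above is straightforward. The sketch below is purely illustrative: the `Patch` record and its field names are hypothetical, and only the three limits (1.2 metres, 28 centimetres, 10 degrees) come from the text.

```python
from dataclasses import dataclass

# Tolerance levels quoted in the article.
MAX_CRATER_M = 1.2     # crater diameter, metres
MAX_BOULDER_M = 0.28   # boulder size, metres (28 centimetres)
MAX_SLOPE_DEG = 10.0   # slope, degrees

@dataclass
class Patch:
    """Hypothetical summary of one candidate landing patch."""
    largest_crater_m: float
    largest_boulder_m: float
    slope_deg: float

def is_safe(patch):
    """A patch is safe only if no hazard exceeds its tolerance level."""
    return (patch.largest_crater_m <= MAX_CRATER_M
            and patch.largest_boulder_m <= MAX_BOULDER_M
            and patch.slope_deg <= MAX_SLOPE_DEG)

candidates = [
    Patch(largest_crater_m=0.8, largest_boulder_m=0.15, slope_deg=4.0),
    Patch(largest_crater_m=2.5, largest_boulder_m=0.10, slope_deg=3.0),
    Patch(largest_crater_m=0.5, largest_boulder_m=0.20, slope_deg=14.0),
]

print([is_safe(p) for p in candidates])
```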
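The shadow estimate rests on simple trigonometry: on level ground, an object of height h under a Sun at elevation angle θ casts a shadow of length h / tan θ, so h = shadow length × tan θ. The sketch below assumes level ground and a known Sun elevation; the numbers are made up, and the actual LHDAC computation is not described in the article.

```python
import math

def height_from_shadow(shadow_length_m, sun_elevation_deg):
    """Estimate an object's height from its shadow, assuming level ground
    and a known Sun elevation angle above the horizon."""
    return shadow_length_m * math.tan(math.radians(sun_elevation_deg))

# Hypothetical measurement: a 2-metre shadow with the Sun 10 degrees above the horizon.
boulder_height = height_from_shadow(2.0, 10.0)
print(round(boulder_height, 3))

# Compare with the 28-centimetre boulder tolerance mentioned in the article.
print(boulder_height > 0.28)
```

A low Sun stretches shadows, so even a modest boulder produces a long, easily detected shadow, which is why low-angle lighting helps hazard detection.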

As in modern face recognition software, the LHDAC algorithm is trained using neural network machine learning. Either close-up images of the Moon taken by orbiters or a mock-up lunar surface are presented as training data for the algorithm to extract the features of craters, slopes and boulders, using their shapes and shadows. Once the algorithm is trained and proven successful in the test, it is deployed for a soft landing on the Moon.

Why is a colour image not best suited?

With 8 bits we can represent 256 values, that is, the 0 to 255 range of a monochrome pixel. Therefore, 8 bits per pixel are adequate for a monochrome image. However, for a colour image we need to encode three sets of 0-255, one for each of R, G and B. That takes 3 x 8 bits, that is, 24 bits per pixel. This implies that using a colour image will be computationally costly. For a given processor, the time taken for feature extraction and image identification will be much greater for colour images than for monochrome images. Further, monochrome images have sharp contrast, enabling easy edge detection. Therefore, even if colour cameras are used, one must first convert the image pixels to greyscale before processing them, as in our mobile phone face unlock app. Greyscale images are adequate to detect crater circles on the Moon and estimate the range of the Moon's surface from the lander.

The cameras deployed in the Vikram lander are digital; they use either an active-pixel sensor (APS) or a charge-coupled device (CCD) to detect the intensity of light falling on each pixel. In these devices, the light, that is, photons, falling on the pixel is converted into electrons. This photocurrent is amplified to create digital data, which is then manipulated in the image processing. An array of 1024 x 1024 pixels gives us a one-megapixel image.
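The cost difference between monochrome and colour can be worked out for the 1024 x 1024 array mentioned above. The short sketch below only does the storage arithmetic (8 bits versus 24 bits per pixel, uncompressed); processing time on a real flight computer depends on much more than raw size.

```python
WIDTH, HEIGHT = 1024, 1024   # pixel array from the article
MONO_BITS = 8                # one 0-255 value per pixel
COLOUR_BITS = 3 * 8          # one 0-255 value each for R, G and B

def image_bytes(width, height, bits_per_pixel):
    """Uncompressed size of an image in bytes."""
    return width * height * bits_per_pixel // 8

pixels = WIDTH * HEIGHT
mono_size = image_bytes(WIDTH, HEIGHT, MONO_BITS)
colour_size = image_bytes(WIDTH, HEIGHT, COLOUR_BITS)

print(pixels)                      # number of pixels in the array
print(mono_size, colour_size)      # bytes per monochrome and per colour frame
print(colour_size // mono_size)    # how many times more data colour needs
```

Every colour frame carries three times the data of a monochrome frame of the same size, before any feature extraction has even begun.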

If the edges are smudged in the image, it is hard for the algorithm to detect them and use them to identify the object. A suitable resolution is a prerequisite for image recognition to work. For a given image size, the image resolution depends on the photocurrent produced in each pixel. The photocurrent produced at the photodetector is proportional to the intensity of incident light. In short, we get a better resolution if the incident light intensity increases. That is why photographs taken in low light are blurred. The greater the incident light intensity, the greater the current, and the better the resolution.

Filters are used to separate RGB and one third of the pixels are devoted to each of them. The incident light is converted into electrons by the photodetector. This is suitably amplified and digital data is obtained.

High resolution and good contrast

Colour images are obtained by using RGB filters. When the red filter is used, only light of specific wavelengths passes through the filter and falls on the pixel. That is, the wavelengths pertaining to green and blue are discarded. In short, only one-third of the incident light falls on the photodetector. However, in the case of monochrome, all the incident light falls on the photodetector, providing a much higher resolution image as compared to the colour image. While the monochrome pixel gets the total light, each of the R, G and B pixels gets only one-third. Colour does not greatly assist feature identification, yet it carries the drawback that each of the RGB sensors captures only one-third of the incident light. That is why feature identification cameras are usually monochrome.

The Lander Position Detection Camera, the Lander Horizontal Velocity Camera, and the Lander Hazard Detection and Avoidance Camera are all used to identify features on the lunar surface. The same is the case with the NavCam of the rover. The images from the two front-facing cameras mounted on the Pragyan rover are processed, features are extracted, and the terrain’s elevation is computed and used for vision-based navigation. That is why all these cameras are monochrome and the images obtained by them are black and white. However, the lander imaging cameras on the Vikram are different. They are used to image the lunar surface. Therefore, they use colour imaging.

But why does the lunar surface appear grey even in a colour image?

The images taken by the Lander Imaging Cameras show the Pragyan rover and the visible parts of the Vikram lander in colour. However, the lunar surface is grey. Why is that? Simple; the lunar soil lacks colour.

The soil on Earth is very colourful. It may be brown, red, yellow, black, grey, white, blue or green and varies dramatically across landscapes. Organic matter, iron, manganese and other minerals interact chemically with water and the atmosphere to create compounds. These compounds are the leading colouring agents of soil on Earth. For example, both iron and manganese oxidise due to weathering. The oxidised iron forms tiny yellow or red crystals. If the soil contains high levels of decomposed organic matter, humus, it appears dark brown to black. Without these compounds, the soil will appear grey.

The Moon has no atmosphere or running water. Therefore, there is no weathering. Hence, the natural colour of the lunar soil, called regolith, is mainly various shades of grey. It is light grey in the highlands and dark grey in the basaltic flood plains. But that is at first sight. If we look closer at colour images of the lunar soil, we find titanium-rich areas with a bluish tint, regions poor in iron that look orangish and regions rich in olivine with a touch of green. This is why the lander imaging cameras take colour images. We can read the finer details of mineral distribution from high-resolution colour images.